SNMP forwarding on Rocks Cluster

Posted on August 13, 2018 • 4 minutes • 716 words

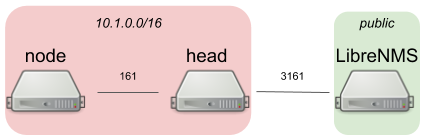

Rocks is a Linux distribution for building clusters. It comes with SNMP configured out of the box, and it is polled using Ganglia. That works nicely, but I like to have all SNMP data in my favorite SNMP system, LibreNMS. Changing the SNMP configuration so that LibreNMS can poll it should be a rather straightforward process; however, the nodes have no connection to the public network. They have one private VLAN to talk to the head-node and another private VLAN to communicate with the storage array. So to get the SNMP data to LibreNMS on the public net, we will have to get crafty with iptables.

Configuration

First let’s check the _/etc/snmp/snmpd.conf_ file from the _Rocks_ installation:

    com2sec notConfigUser default public
    group notConfigGroup v1 notConfigUser
    group notConfigGroup v2c notConfigUser
    view all included .1 80
    access notConfigGroup "" any noauth exact all all all

This config is a bit complex and I figure I won’t go back to it, so I commented it out. I decided not to remove it completely, since I don’t want to rule out going back to Ganglia should it turn out to be an important system in Rocks (note: it’s not). I added:

    # this creates a SNMPv1/SNMPv2c community named "my_servers"
    # and restricts access to LAN addresses 192.168.0.0/16 (last two 0's are ranges)
    rocommunity my_servers <our_public_net>/16
    rocommunity my_servers 10.1.0.0/16

    # setup info
    syscontact "svennD"

    # open up
    agentAddress udp:161

    # run as
    agentuser root

    # dont log connection from UDP:
    dontLogTCPWrappersConnects yes

The important part here is that I added two IP ranges. I’m not sure the private VLAN (10.1.0.0/16) is even required, but since traffic passes over those devices I added it anyway.

The next step is setting up iptables on the head-node. Since _Rocks_ is already pretty protective (good!), I had to add an extra rule to even allow SNMP polling of the head-node itself:

    -A INPUT -p udp -m udp --dport 161 -j ACCEPT

This allows LibreNMS to poll the head-node by accepting incoming UDP packets on port 161.
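A quick way to confirm that the head-node answers is to query it from the LibreNMS machine with snmpget from Net-SNMP. The following is only a sketch: the hostname `head-node.example.org` and the `DRY_RUN` switch are hypothetical, and by default the script just prints the command instead of running it.

```shell
#!/bin/sh
# Sketch: test SNMP polling of the head-node from the LibreNMS side.
COMMUNITY="my_servers"             # community name from the snmpd.conf above
HEAD_NODE="head-node.example.org"  # hypothetical public name of the head-node
DRY_RUN="${DRY_RUN:-1}"            # hypothetical switch: 1 = only print the command

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# $1 = host, $2 = UDP port. Asks for sysContact with a 2 second timeout and 1 retry.
snmp_check() {
    run snmpget -v2c -c "$COMMUNITY" -t 2 -r 1 "udp:$1:$2" SNMPv2-MIB::sysContact.0
}

snmp_check "$HEAD_NODE" 161
```

With `DRY_RUN=0` the reply should contain the contact string set in snmpd.conf. Calling `snmp_check` with port 3161 instead would test the forwarded node once the rules further down are in place.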

The node itself is reached through the head-node: packets that arrive on the head-node on port 3161 are forwarded to port 161 on the node. Using port 3161 stays clear of the well-known ports and of the REJECT rule Rocks has for ports 1-1023. This can be done with a prerouting rule:

    -A PREROUTING -i eth1 -p udp -m udp --dport 3161 -j DNAT --to-destination 10.1.255.244:161
    -A POSTROUTING -o eth1 -j MASQUERADE

Note: 10.1.255.244 is the private IP of the node.
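The two rules are written in save-file notation. To try them out at runtime, something like the sketch below might work. It assumes the rules belong in the nat table, that it is run as root on the head-node, and that IP forwarding is already enabled there. `DRY_RUN` is a hypothetical switch; by default the script only prints the iptables commands.

```shell
#!/bin/sh
# Sketch: apply the DNAT and MASQUERADE rules from this post at runtime.
NODE_IP="10.1.255.244"   # private IP of the node (from the post)
PUBLIC_IF="eth1"         # interface used in the rules above
EXT_PORT="3161"          # port LibreNMS polls on the head-node
DRY_RUN="${DRY_RUN:-1}"  # hypothetical switch: 1 = only print the commands

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

apply_nat_rules() {
    # Rewrite the destination of incoming UDP packets on EXT_PORT to the node's SNMP port.
    run iptables -t nat -A PREROUTING -i "$PUBLIC_IF" -p udp -m udp \
        --dport "$EXT_PORT" -j DNAT --to-destination "$NODE_IP:161"
    # Masquerade whatever leaves through the same interface.
    run iptables -t nat -A POSTROUTING -o "$PUBLIC_IF" -j MASQUERADE
}

apply_nat_rules
```

Running it with `DRY_RUN=0` applies the rules, and `iptables -t nat -L -n -v` then lists them together with their packet counters, which helps to see whether the poll from LibreNMS actually hits the DNAT rule.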

From now on packets should arrive at the node. This can be checked with tcpdump on the node, which came in handy while debugging this project:

    tcpdump 'port 161'

For _snmpd_ on the node to be able to answer, the head-node has to forward the packets. This can be done with a forward rule:

    -A FORWARD -d 10.1.255.244/32 -p udp -m udp --dport 161 -m state --state NEW,RELATED,ESTABLISHED -j

Note: again, 10.1.255.244 is the IP of the node.

Surprisingly, the node was still unable to answer the incoming requests. This was because its default route (_route -n_) pointed towards one of the storage servers. We can add the head-node as default gateway using route:

    route add default gw 10.1.1.1 eth0

Note: 10.1.1.1 is the private VLAN IP of the head-node.
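On systems where the iproute2 tools are preferred over route, the same check and fix could look roughly like the sketch below. This is not what I ran: it swaps in `ip route replace`, which overwrites the existing default route instead of adding a second one. The helper names `default_gateway` and `fix_command` are made up for this example, and the script only reports what it would do.

```shell
#!/bin/sh
# Sketch: check the node's default gateway and suggest a fix if it is wrong.
HEAD_NODE_IP="10.1.1.1"  # private VLAN IP of the head-node (from the post)
PRIVATE_IF="eth0"        # interface used in the route command above

# Read `ip route show default` output on stdin and print the gateway address.
default_gateway() {
    awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# Print the iproute2 command that points the default route at the head-node.
fix_command() {
    echo "ip route replace default via $HEAD_NODE_IP dev $PRIVATE_IF"
}

current="$(ip route show default 2>/dev/null | default_gateway)"
if [ "$current" != "$HEAD_NODE_IP" ]; then
    echo "default gateway is '${current:-unknown}', expected $HEAD_NODE_IP"
    echo "to fix, run as root: $(fix_command)"
fi
```

Either way the change only lives in the running kernel, so it disappears when the node reboots.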

Conclusion

Bam! LibreNMS can talk to the node using the public IP of the head-node on port 3161, and to the head-node on port 161.

One issue that remains unsolved is that this setup is lost on reboot: Rocks by default reinstalls nodes that reboot. This can be resolved by adapting the configuration on _Rocks_ and rebuilding the distribution (rocks create distro). However, this is rather advanced and (IMHO) difficult to debug, so I did not use that system for this project.

Another problem is that it’s rather labor-intensive to add all the configuration to all the nodes (this post covers only a single node). This is most easily solved with scripts, using rocks run host to execute bits on all the nodes. I decided that I only want one node to be polled as a sample. I already track opengridscheduler using an extend on the head-node, so this is mostly for debugging. Good luck!
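For anyone who does want more than one node, the rocks run host approach might look something like this sketch, run on the head-node. The node names are hypothetical, restarting snmpd through `service` is an assumption about the node setup, and copying snmpd.conf to the nodes is left out. `DRY_RUN` is again a hypothetical switch that makes the script print instead of execute.

```shell
#!/bin/sh
# Sketch: run the per-node steps on several nodes via `rocks run host`.
NODES="compute-0-0 compute-0-1"  # hypothetical node names
HEAD_NODE_IP="10.1.1.1"          # private VLAN IP of the head-node (from the post)
DRY_RUN="${DRY_RUN:-1}"          # hypothetical switch: 1 = only print the commands

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

configure_nodes() {
    for node in $NODES; do
        # Assumption: snmpd is managed by `service` on the nodes.
        run rocks run host "$node" \
            command="route add default gw $HEAD_NODE_IP eth0; service snmpd restart"
    done
}

configure_nodes
```

Each extra node would also need its own forwarding rules and its own external port on the head-node, and all of it is still lost when a node reinstalls.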
